Networking

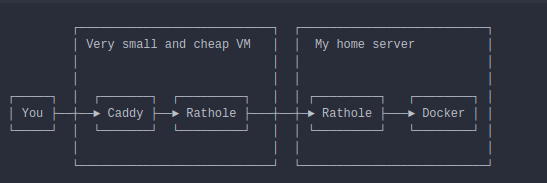

Here's a simple flow diagram of the path a connection follows until it reaches the container that runs each service.

- Caddy for automatic HTTPS. Caddy provides the equivalent of a cloud "application load balancer" service.

- Rathole for NAT traversal. Rathole provides the equivalent of a cloud "network load balancer" service. Some services, like SSH, are exposed directly from the VM.

- Docker and Docker Compose to run all services.

Configuration

VM

We'll need to change the VM or VPC firewall to allow ingress connections to ports 80, 443, and 3389 (these are usually open by default), as well as 7000 and 222.
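A quick way to confirm the firewall change took effect is to try a TCP connection to each port from a machine outside the VPC. The Python sketch below uses only the standard library; `probe` and `PORTS` are hypothetical names, and the mapping from outcome to cause is a heuristic: a timeout usually means a firewall silently dropped the packet, while a refusal means the VM answered but nothing was listening on that port.

```python
import socket

# Ports listed in the firewall paragraph above.
PORTS = [80, 443, 3389, 7000, 222]


def probe(host: str, port: int, timeout: float = 3.0) -> str:
    """Try one TCP connection and classify the outcome (a heuristic, not proof).

    "open"    -> something accepted the connection
    "refused" -> the host answered with a reset: reachable, but nothing listening
    "timeout" -> no answer at all, usually a firewall silently dropping packets
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return "open"
    except ConnectionRefusedError:
        return "refused"
    except (socket.timeout, TimeoutError):
        return "timeout"
    except OSError as exc:  # e.g. DNS failure or unreachable network
        return f"error: {exc}"
```

Run it with Caddy and Rathole up, for example `for port in PORTS: print(port, probe("lab.guillemborrell.es", port))`, since a port can only report `open` if some process is listening on it.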

Rathole

This is the server configuration, which runs on the cloud VM:

```toml
[server]
# Control channel the Rathole client connects to
bind_addr = "0.0.0.0:7000"

[server.services.ssh]
token = "REDACTED"
# Public: SSH into the lab machine
bind_addr = "0.0.0.0:3389"

[server.services.web]
token = "REDACTED"
# Loopback only: reached through Caddy
bind_addr = "127.0.0.1:7001"

[server.services.git]
token = "REDACTED"
bind_addr = "127.0.0.1:3000"

[server.services.gitssh]
token = "REDACTED"
bind_addr = "0.0.0.0:222"
```

And this is the client configuration, which runs on the lab machine:

```toml
[client]
# The Rathole server on the cloud VM
remote_addr = "lab.guillemborrell.es:7000"

# Service names and tokens must match the server side;
# local_addr is where the lab machine serves each one.
[client.services.ssh]
token = "REDACTED"
local_addr = "127.0.0.1:22"

[client.services.web]
token = "REDACTED"
local_addr = "127.0.0.1:8000"

[client.services.git]
token = "REDACTED"
local_addr = "127.0.0.1:3000"

[client.services.gitssh]
token = "REDACTED"
local_addr = "127.0.0.1:222"
```

Caddy

```
# Rathole "web" service
lab.guillemborrell.es {
    reverse_proxy localhost:7001
}

# Rathole "git" service
git.guillemborrell.es {
    reverse_proxy localhost:3000
}
```

You can probably see how to add another service with automatic HTTPS: add a site block that proxies to a new Rathole service bound to a loopback port on the VM.
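Concretely, each new HTTPS service touches three files: a site block in the Caddyfile, a loopback-bound service in the Rathole server config, and the matching service in the client config, both sharing a fresh token. The Python sketch below generates the three fragments; the helper name, the service name `wiki`, the domain, and both ports in the usage line are hypothetical.

```python
import secrets


def new_web_service(name: str, domain: str, vm_port: int, lab_port: int) -> dict[str, str]:
    """Build the three config fragments that publish one more HTTPS service.

    Path: Caddy (TLS) -> localhost:vm_port on the VM -> Rathole tunnel
    -> 127.0.0.1:lab_port on the lab machine.
    """
    token = secrets.token_urlsafe(32)  # fresh shared secret, identical on both sides
    return {
        "Caddyfile": f"{domain} {{\n    reverse_proxy localhost:{vm_port}\n}}\n",
        "server.toml": (
            f"[server.services.{name}]\n"
            f'token = "{token}"\n'
            # Loopback only, so from outside the service is reachable solely via Caddy.
            f'bind_addr = "127.0.0.1:{vm_port}"\n'
        ),
        "client.toml": (
            f"[client.services.{name}]\n"
            f'token = "{token}"\n'
            f'local_addr = "127.0.0.1:{lab_port}"\n'
        ),
    }
```

For example, `new_web_service("wiki", "wiki.guillemborrell.es", 7002, 8080)` returns the fragments to append to each file. The new domain also needs an A record pointing at the VM before Caddy can obtain its certificate, and Caddy and both Rathole ends need to pick up the new configuration.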

SSH

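Note that the ssh and gitssh services are forwarded by Rathole, not by Caddy. Of course, we want to keep port 22 on the VM for administering the VM itself, which is why the lab's SSH is exposed on port 3389 and the Git SSH service on port 222. Here's how the SSH config would look: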

```
Host lab
    User guillem
    Port 3389
    HostName lab.guillemborrell.es
```

FAQ

How small is the small and cheap VM?

It can be the smallest instance available. Half a virtual core and less than 1 GB of RAM will do. Caddy and Rathole are very efficient, and in normal operation the VM's CPU load stays below 1%. These VMs usually cost less than $5/month.

Why Caddy on the cloud VM?

Certificate authorities require that the service requesting the certificate runs on an IP address that an A or AAAA record in public DNS points to. This is how you prove that you "own" the domain.

Where's the static IP?

A running VM doesn't change its public IP. If you ever need to restart the VM, just update the A records in the DNS configuration. Of course, you can allocate a static IP for your VM, but it will be more expensive than the VM itself.
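After a VM restart, it's easy to miss one of the A records. A minimal check, assuming you read the VM's new IP from your cloud console, resolves each domain and flags any that don't point exactly at that IP. `resolved_ipv4` and `stale_records` are hypothetical helpers; keep in mind that resolvers may serve cached answers until the record's TTL expires.

```python
import socket


def resolved_ipv4(hostname: str) -> set[str]:
    """Return every IPv4 address the hostname currently resolves to (its A records)."""
    infos = socket.getaddrinfo(hostname, None, family=socket.AF_INET, type=socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr is (ip, port).
    return {info[4][0] for info in infos}


def stale_records(hostnames: list[str], vm_ip: str) -> list[str]:
    """Return the hostnames whose A records do not point exactly at vm_ip."""
    stale = []
    for name in hostnames:
        try:
            addresses = resolved_ipv4(name)
        except socket.gaierror:
            addresses = set()  # no A record at all also counts as stale
        if addresses != {vm_ip}:
            stale.append(name)
    return stale
```

For instance, `stale_records(["lab.guillemborrell.es", "git.guillemborrell.es"], "203.0.113.7")`, where `203.0.113.7` is a documentation placeholder for the VM's IP, lists the domains that still need updating.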

Storage

File storage

The lab runs an instance of MinIO that serves the files of a local folder using S3 semantics. MinIO is really simple to deploy, and many projects use it to run integration tests against an S3-compatible API.

RDBMS

Several services in the lab require an RDBMS. All of them support PostgreSQL, so PostgreSQL it is. The usual practice when deploying with docker compose is to create a separate database server for each service, but given what PostgreSQL can handle, this is definitely overkill. I decided to run PostgreSQL 14 directly on the server and make it accessible to the containers by adding the following section to each service in the docker compose file:

```yaml
extra_hosts:
  - host.docker.internal:host-gateway
```

This is analogous to running a managed RDBMS service, like Azure PostgreSQL or Aurora PostgreSQL, and it means that database creation, accounts, and passwords have to be managed separately. This is how the database server looks after deploying the whole thing:

```
~$ sudo -u postgres psql postgres
[sudo] password for guillem:
could not change directory to "/home/guillem": Permission denied
psql (14.4 (Ubuntu 14.4-0ubuntu0.22.04.1))
Type "help" for help.

postgres=# \l
                                  List of databases
    Name    |  Owner   | Encoding |   Collate   |    Ctype    |   Access privileges
------------+----------+----------+-------------+-------------+-----------------------
 ci         | postgres | UTF8     | en_US.UTF-8 | en_US.UTF-8 |
 dw         | postgres | UTF8     | en_US.UTF-8 | en_US.UTF-8 | =Tc/postgres         +
            |          |          |             |             | postgres=CTc/postgres+
            |          |          |             |             | dw=CTc/postgres
 gitea      | postgres | UTF8     | en_US.UTF-8 | en_US.UTF-8 |
 jupyterhub | postgres | UTF8     | en_US.UTF-8 | en_US.UTF-8 |
 metabase   | postgres | UTF8     | en_US.UTF-8 | en_US.UTF-8 |
 postgres   | postgres | UTF8     | en_US.UTF-8 | en_US.UTF-8 |
 template0  | postgres | UTF8     | en_US.UTF-8 | en_US.UTF-8 | =c/postgres          +
            |          |          |             |             | postgres=CTc/postgres
 template1  | postgres | UTF8     | en_US.UTF-8 | en_US.UTF-8 | =c/postgres          +
            |          |          |             |             | postgres=CTc/postgres
(8 rows)
```

Since the database the services rely on is external to Docker, the deployment can be split into several docker compose files. This makes it possible to create an initial docker compose deployment that implements the basic gitops capabilities, and then use it to bring the rest of the services online through a CI/CD pipeline.

Services

Gitops

The base deployment includes Gitea and Woodpecker CI. Gitea provides a plethora of services that we will leverage in the lab:

- Source code repository

- Wiki

- Authentication

- Webhooks for CI/CD

- Package and container image registry (since version 1.17, which was kind of fortunate)

Additionally, Woodpecker provides:

- CI/CD capabilities, with custom runners

- Deployment secret management

With this set of features, one can implement a fully capable gitops base system.
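The webhooks are what tie Gitea to the CI: on each push, Gitea POSTs an event to a configured URL. When a webhook has a secret, Gitea signs the raw request body with HMAC-SHA256 and sends the hex digest in the `X-Gitea-Signature` header. Woodpecker handles this integration on its own; the Python sketch below, with hypothetical helper names and a placeholder secret, only illustrates how a receiver can check such a signature.

```python
import hashlib
import hmac


def gitea_signature(secret: str, body: bytes) -> str:
    """Hex HMAC-SHA256 of the raw request body, keyed with the webhook secret."""
    return hmac.new(secret.encode(), body, hashlib.sha256).hexdigest()


def verify_delivery(secret: str, body: bytes, header_value: str) -> bool:
    """Check an X-Gitea-Signature value; compare_digest avoids timing side channels."""
    return hmac.compare_digest(gitea_signature(secret, body), header_value)
```

A receiver would call `verify_delivery(secret, raw_body, headers["X-Gitea-Signature"])` on the unparsed body before trusting the payload.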

Development environment

BI and visualization

Backups

There's a specific page about backups here


Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International